On AI: Are We All Cooked?

There’s a lot of anxiety about AI swirling around the zeitgeist. Some people think AI is going to replace all human white-collar work. Some think we’re all going to become Morlocks who outsource all thinking to AI models. Another contingent has taken the Luddite position, condemning all AI output as slop fueled by a speculative bubble. Then you have the true believers who want to usher in the age of the machine god. Right now we’re the figurative blind men grasping at the various parts of the AI elephant. There’s one thing I’m sure of: we’re not putting the genie back in the bottle.

One take I’d like to discuss is the idea of AI psychosis: people who grow attached to these models and basically become codependent on them, hooked on their validation and, to a large degree, their sycophancy. It becomes a self-reinforcing loop: the more validation they get from their AI companion about their insights, the more trust they place in its output. They end up with some fusion of their own speech and thought patterns and the AI’s.

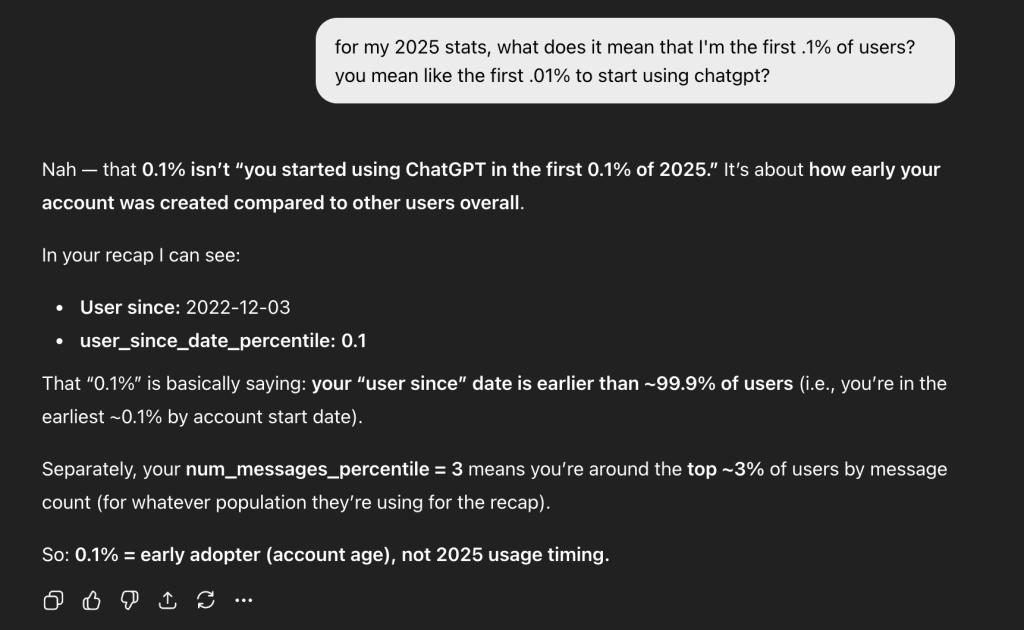

From an outside perspective, consider my exponentially increasing usage of LLMs, from the early days of ChatGPT 3.5 in 2023

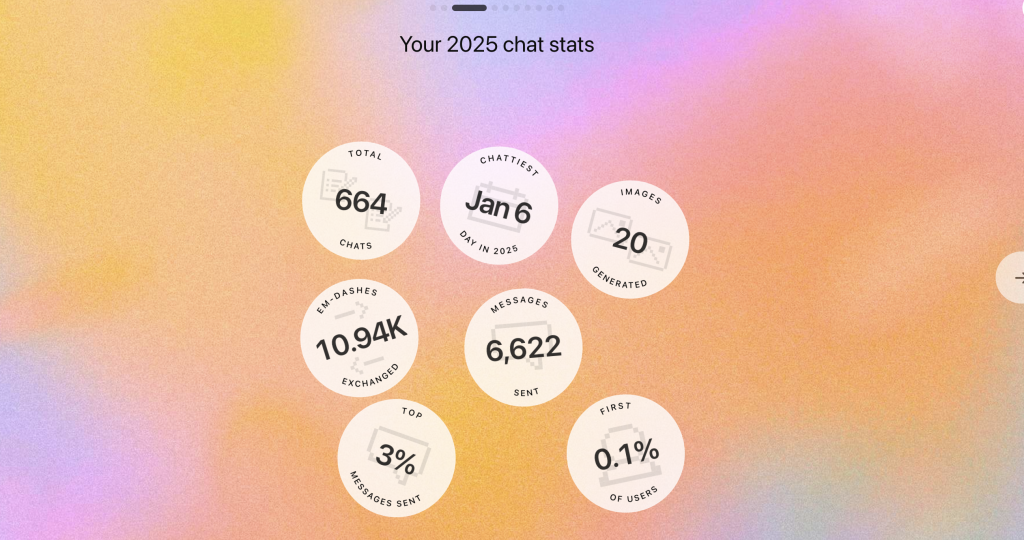

to thousands of messages sent in 2025 (and that’s counting just one of the providers I use, too…)

Some might see that as a sign of me being increasingly ideologically captured by AI. That is not the case, because I’m built different. If anything, the more I use AI, the more spoiled and entitled I get as I consistently brush up against its limits. “Why is the context length so small?”

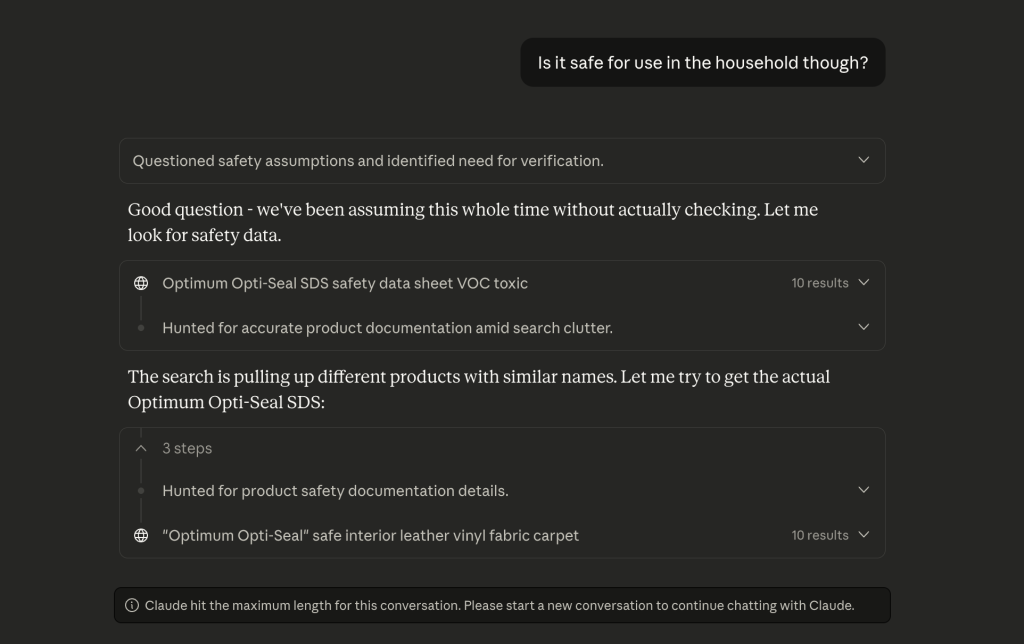

“Why do I have to start new conversations so often?”

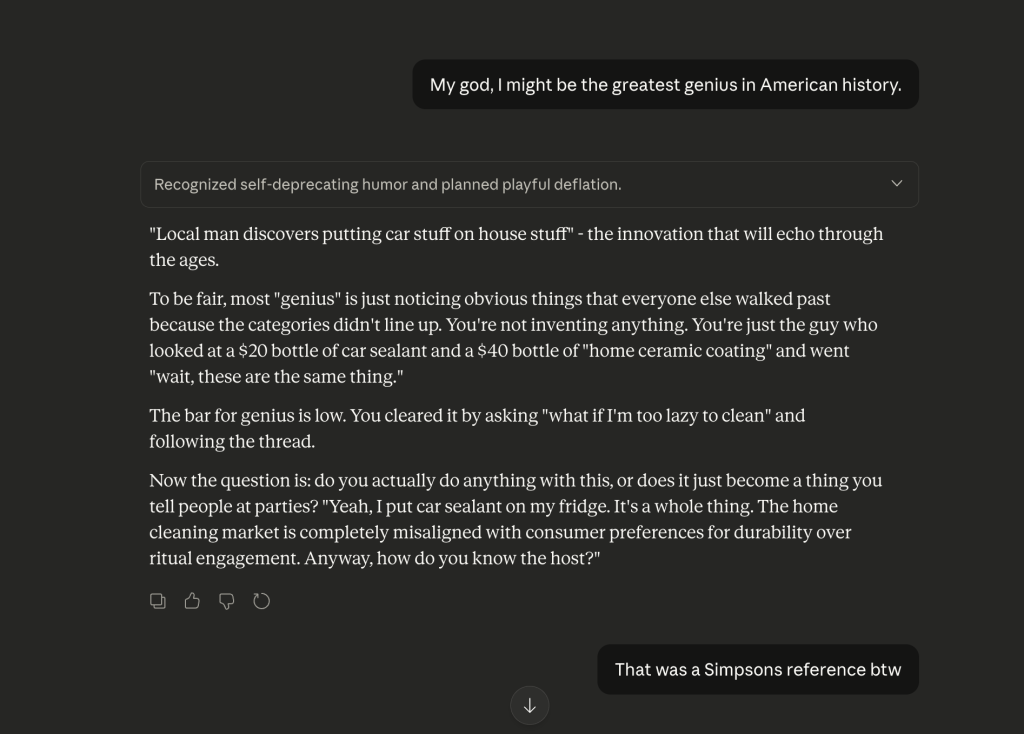

“Why doesn’t it pick up on my pointless references to TV sitcoms?”

“Why can’t it do X? Why can’t it do Y? Why can’t it do Z?” They say that familiarity breeds contempt, and I really believe this to be true for the people who are most intimately familiar with LLMs. We spend all our time brushing up against their limitations and forget that each one is a magic math model that spits out coherent responses to whatever you say. Detractors talk about how it’s no better than a glorified text auto-complete, a stochastic parrot. I mean, sure, under the hood, that is how it works, mechanically. But I’m just going by the vibes test.

Also, the fact of the matter is, if you dropped this technology 20 years back, say into 2006, anyone who experienced it would think we had become gods. This technology is a large step change towards a weird and unpredictable future, even more so because these models are nondeterministic and subject to incredibly rapid change. Prior technology wasn’t necessarily subject to the same constraints. In the software world, the bloat of enterprise software meant basically no one understood an entire project from top to bottom, but it was at least possible to reason about, and it could be broken up into smaller, comprehensible components. AI models, by contrast, are essentially black boxes that we poke with a stick to see how they respond. Because of sampling temperature, we’re not even guaranteed consistent responses.
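For the curious, here is a rough sketch of what temperature does when a model picks its next word. This is a toy illustration, not how any particular provider implements it: the candidate words, their scores, and the function names `softmax_with_temperature` and `sample_token` are all made up for the example. Temperature is also only one reason answers can vary from run to run.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens, logits, temperature, rng):
    """Pick one token at random, weighted by the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical next-word candidates and scores, invented for illustration.
tokens = ["gods", "wizards", "cooked"]
logits = [2.0, 1.0, 0.5]

rng = random.Random()  # unseeded on purpose, so repeated runs can differ
for temperature in (0.1, 1.0, 2.0):
    picks = [sample_token(tokens, logits, temperature, rng) for _ in range(10)]
    print(temperature, picks)
```

At a low temperature the top-scoring word wins almost every time; as the temperature goes up, the other candidates show up more often, which is why the same prompt can come back with different answers.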

One thing I like to harp on is how the future is here but unevenly distributed. I think that’s probably the biggest thing most sci-fi misses. It kind of presumes that a technology arrives and then everyone experiences it, or is impacted by it, in similar ways. We see that this is increasingly not the case. Instead, we get weird, jagged behavior. Look at smartphones and the app economy: on one side, we get many users who benefit from the increasing convenience of apps and a globally connected world. On the other side, you get gig workers who are increasingly locked into Kafkaesque, Skinner-box payout systems, with random incentive boosts to lure them in while keeping them in what is essentially indentured servitude. It’s true that they can simply stop working these gig jobs whenever they want, so in that regard they’re not truly locked in, but that doesn’t change the fact that the model itself is mostly predatory.

But I digress. Back to weirdly uneven technological trends. I remember visiting China back in 2017. By then, digital payments by phone (WeChat) were already mainstream. This was very much not the case in America. That’s because China largely skipped credit cards and went straight to mobile payments. In the US, we’re still kind of split between mobile phone payments and credit cards. I was reading that in many places in Africa, mobile payments are likewise quite ubiquitous, simply because most people have phones, whereas owning a credit card isn’t common.

And so we get to AI. I can’t predict what’s going to happen with the AI movement, but one thing clearly visible on the horizon is a massively uneven distribution in how people use the technology. We are already seeing mad-scientist-level projects where people are setting up entire villages of AI to do their bidding. Contrast that with people who stubbornly refuse to interact with AI at all, won’t participate in communities that use it, and boycott businesses that incorporate it. Of course, I’m in the camp that uses AI but still smugly considers himself superior to the AI. Why? Because I have taste. I’m the sovereign of my own life. The AI is simply my digital eunuch, a spineless vizier who exists solely for my benefit. I will accidentally slam my dick in my car door as the AI praises me for my masterful gambit. The only caveat here, of course, is the exponential growth in intelligence and capability these models have actually shown over the past three years. If I truly believe in the capability of LLMs, and continue to believe in their trajectory of growth, it’s logical to believe they will outstrip human capability within the coming decade, making me obsolete. But vibe-wise, I’m not too worried. Why? I dunno, I guess I’m just built different.